Location Detection

Data Preparation

Prepare Training Images: Collect images of the different types of objects the model needs to localize, so that the model can learn to recognize the targets in their various forms. It is recommended that these images account for more than 80% of the total image set.

Prepare Test Set: Collect images of the different types of objects the model needs to localize, covering the various forms the targets can take. It is recommended that these images account for less than 20% of the total image set.

TIP

- If the number of images exceeds 200, please compress them into a zip file. The zip file should not exceed 5GB.

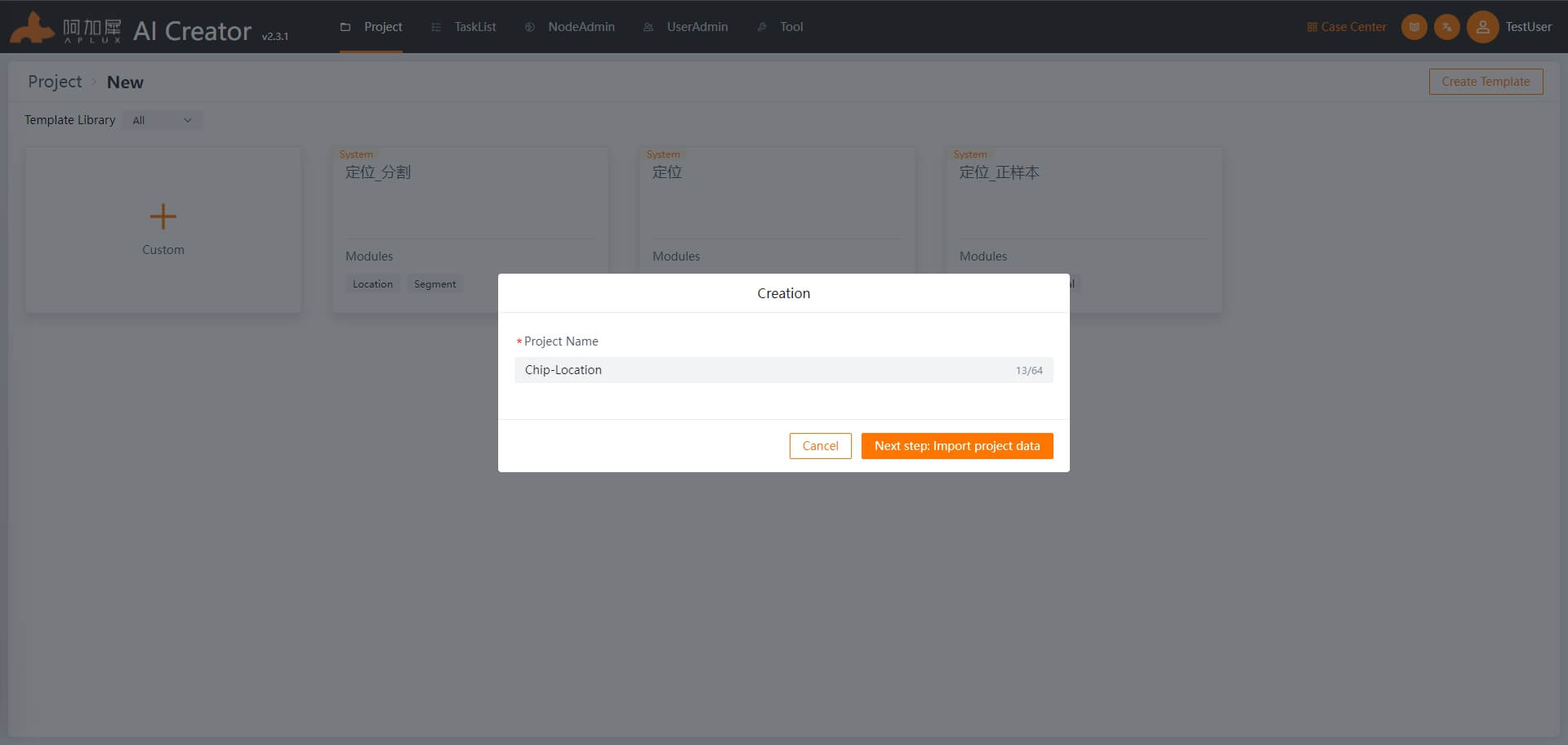
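The split and packaging rules above can be sketched in a few lines of Python. This is only an illustration and not part of AI Creator: the function names, the list of image suffixes, the fixed random seed, and the reading of "5GB" as 5 × 1024³ bytes are all assumptions.

```python
import random
import zipfile
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}  # assumed formats, adjust as needed
MAX_LOOSE_IMAGES = 200         # above this count, the tip asks for a zip file
MAX_ZIP_BYTES = 5 * 1024 ** 3  # "5GB" interpreted as 5 GiB (assumption)


def split_images(paths, train_ratio=0.8, seed=0):
    """Shuffle the image paths reproducibly and return (train, test) lists."""
    paths = sorted(paths)
    random.Random(seed).shuffle(paths)
    cut = round(len(paths) * train_ratio)
    return paths[:cut], paths[cut:]


def needs_zip(paths):
    """True when there are more images than should be uploaded loose."""
    return len(paths) > MAX_LOOSE_IMAGES


def zip_images(paths, zip_path):
    """Pack the images into one zip file and enforce the size limit."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in paths:
            # Files are stored flat, so file names must be unique.
            archive.write(path, arcname=Path(path).name)
    size = Path(zip_path).stat().st_size
    if size > MAX_ZIP_BYTES:
        raise ValueError(f"{zip_path} is {size} bytes, above the 5 GB limit")
    return size


def list_images(folder):
    """Collect image files from a folder (non-recursive)."""
    return [p for p in Path(folder).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
```

A typical use would be `train, test = split_images(list_images("images"))`, followed by `zip_images(train, "train.zip")` only when `needs_zip(train)` is true.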

Create Project

Log in to AI Creator and click the "New Project" button in the project center.

Click "Custom Project", then in the popup window, enter a project name, for example "Food Packaging - Character Recognition". Click "Next: Import Project Data" to complete the project creation.

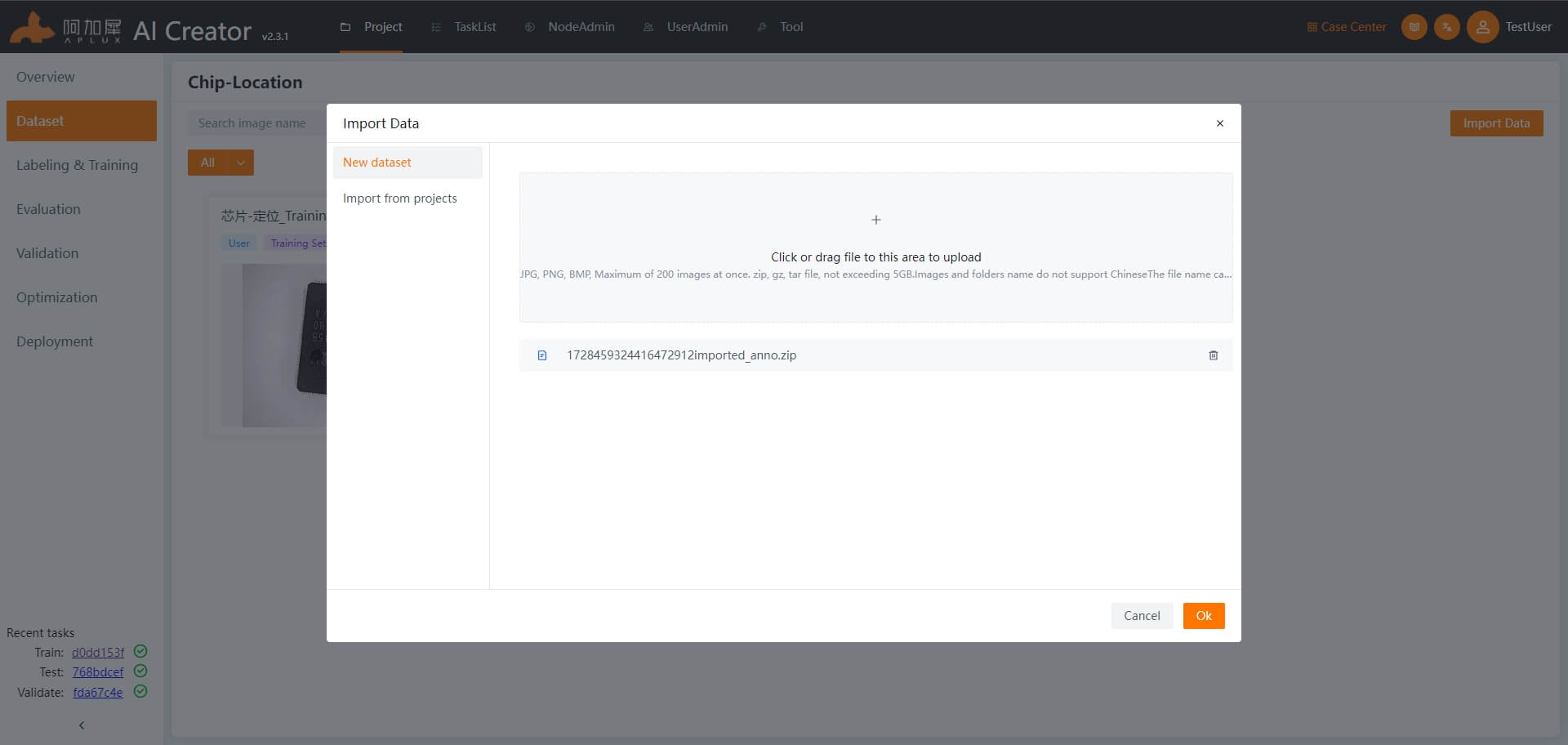

Import Data

- Click on the "Dataset" tab in the left sidebar.

- Upload the training and test image sets. Project creation automatically creates the training set and test set folders. Open the training set or the test set. In each set, click "Import Images" -> "Create New Dataset", then drag the images or compressed files into the upload area. Click "OK" to import the data.

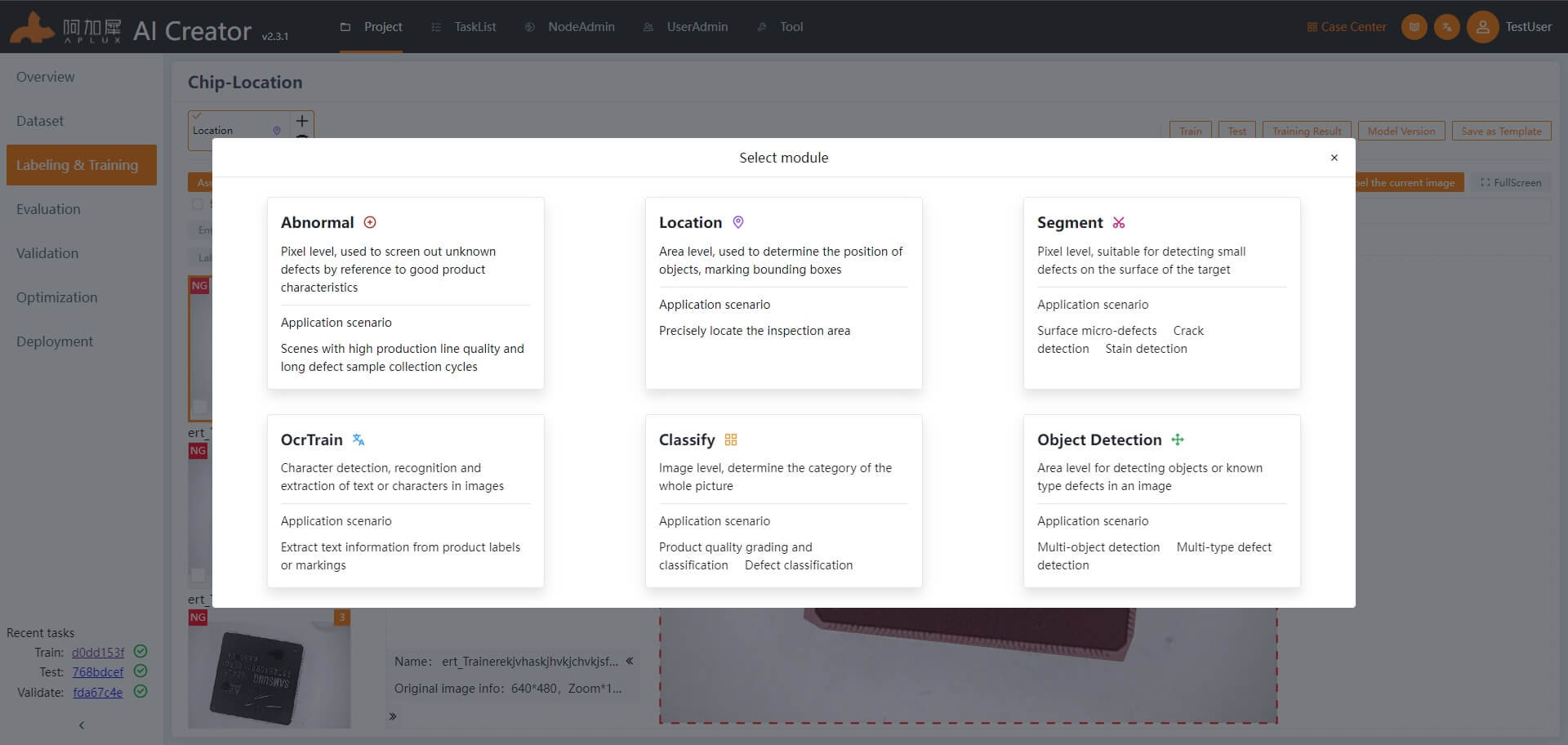

Add Algorithm Module

- Click on the "Label & Train" tab in the left sidebar.

- Click "Add Module" and, in the module selection window, choose "Location".

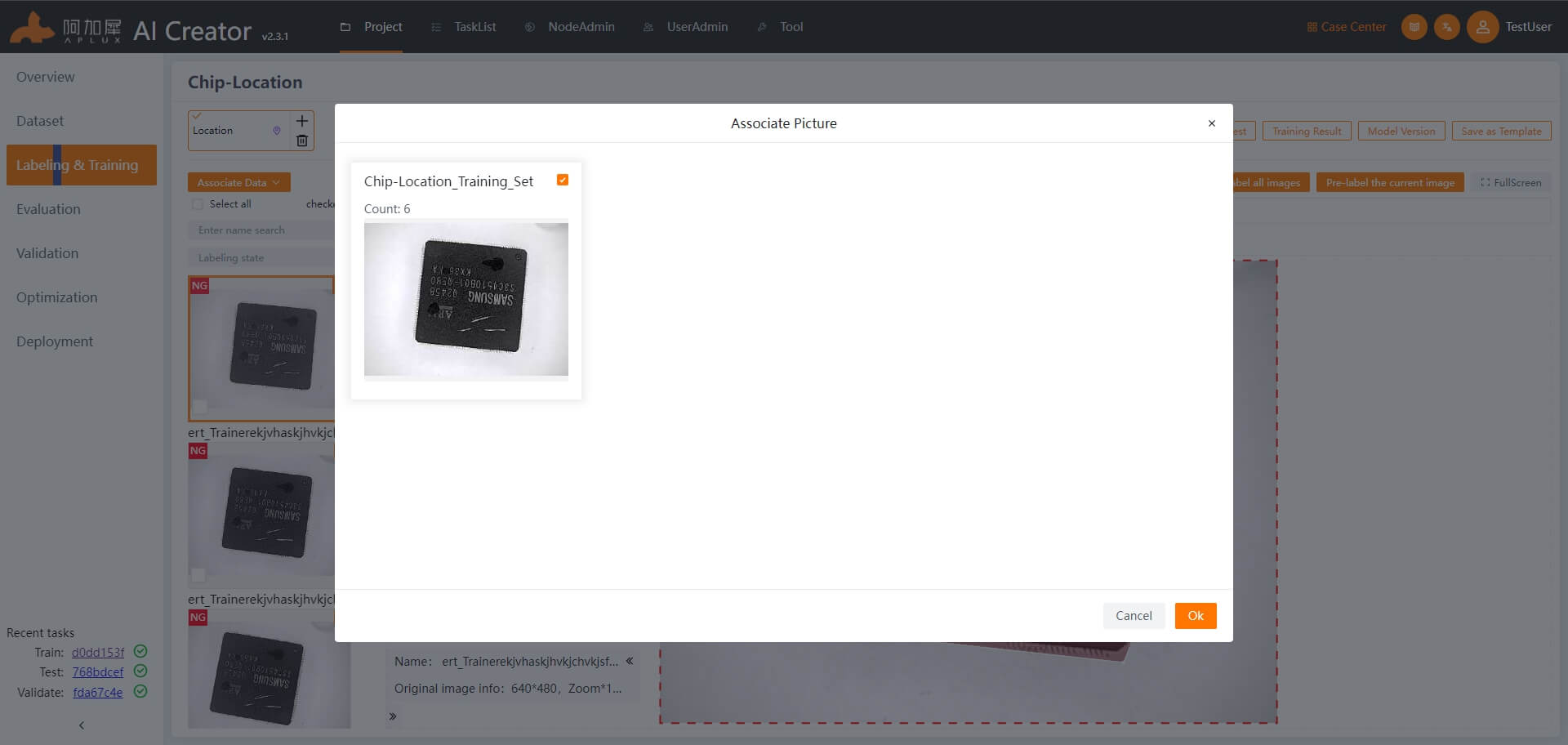

Associate Images

Click the "Associate Data" dropdown menu and select "Associate Images". In the popup window, select the training set and click "OK".

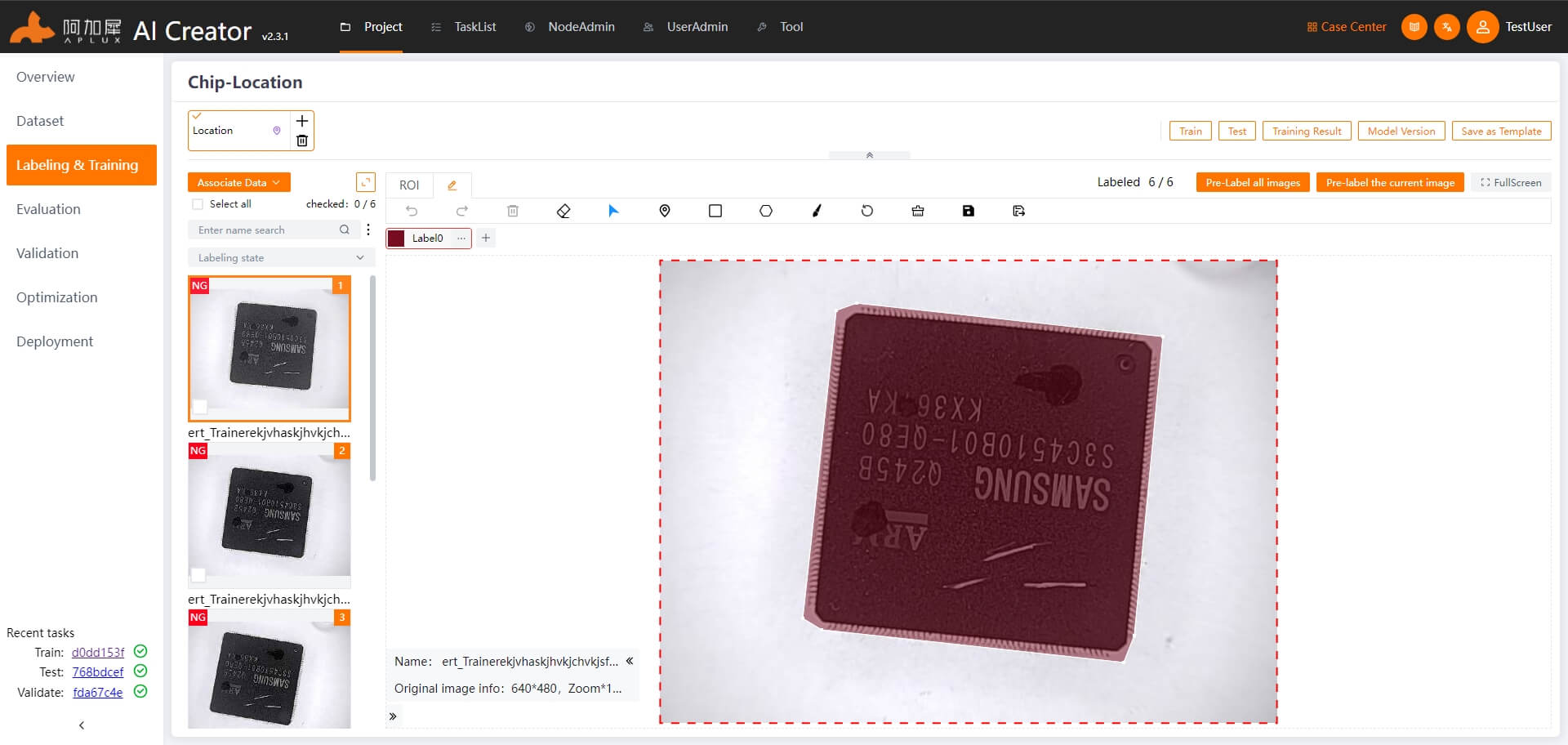

Data Labeling

- In the left sidebar, click "Label & Train".

- Label the data.

Use the labeling tools (Rectangle, Polygon, Brush) to mark the targets that need to be localized, and save the annotation information for the entire image (shortcut: Ctrl+Shift+S).

TIP

- If multiple areas need to be localized, label all of them before saving the annotation information.

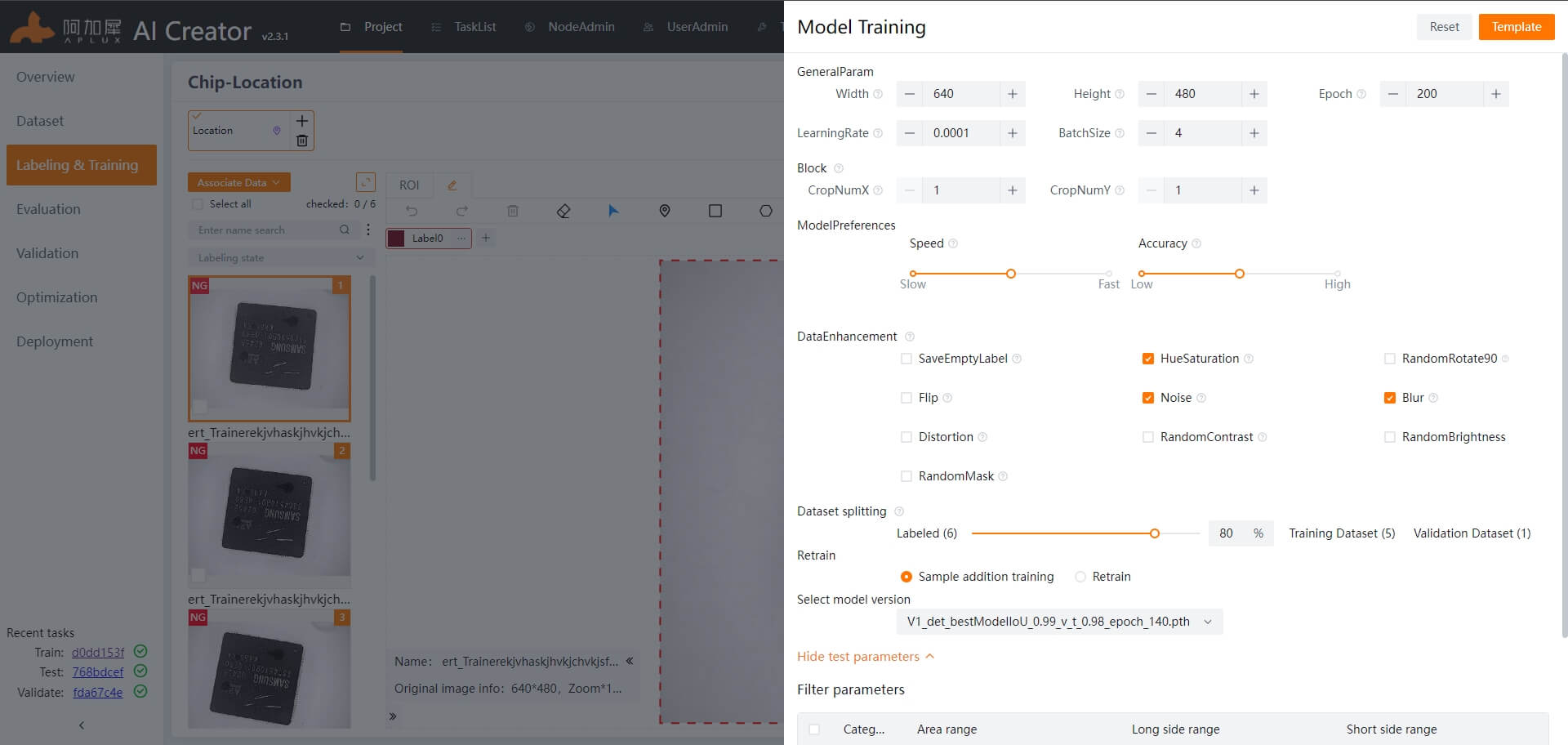

Model Training

Click the "Train Model" button in the top right corner of the page to enter the model training parameter settings.

Set the general parameters and network blocks.

Width, Height, X-axis blocks, and Y-axis blocks: Set these four parameters according to your actual images. Width and Height are the dimensions of the model's input image. The training image dimensions are related to the model input dimensions and the number of network blocks by the following formulas:

Width * (X-axis value) = training image width

Height * (Y-axis value) = training image height
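As a worked example of the two formulas, the sketch below derives the block count along one axis from the training image size and the model input size. The function name is hypothetical, and the sketch takes the formulas literally by requiring the image size to be an exact multiple of the input size; whether AI Creator enforces this is not stated here.

```python
def blocks_for(image_size, model_input_size):
    """Return the block count along one axis so that
    model_input_size * blocks == image_size."""
    if model_input_size <= 0:
        raise ValueError("model input size must be positive")
    blocks, remainder = divmod(image_size, model_input_size)
    if blocks == 0 or remainder != 0:
        raise ValueError(
            f"{image_size} is not a whole multiple of {model_input_size}"
        )
    return blocks


# Example: 2048 x 1536 training images with a 512 x 512 model input.
x_blocks = blocks_for(2048, 512)  # X-axis blocks
y_blocks = blocks_for(1536, 512)  # Y-axis blocks
print(x_blocks, y_blocks)
```

With these example numbers, the X-axis value is 4 and the Y-axis value is 3, since 512 * 4 = 2048 and 512 * 3 = 1536.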

Training rounds: Set as needed, e.g., 500 rounds.

Other parameters: Use the default values.

Model preference for speed and accuracy should generally be set to a mid-range value.

For data augmentation, use the default values.

Set the training set ratio to 80% in the "Dataset Split" section.

For fresh training, select "Retrain" under "Training Method". For incremental training based on the previous model, choose "Incremental Training".

Set the filtering parameters: select the labels, then define the valid area range or the valid length range (long or short side) for the defect.
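To illustrate what an area or side-length filter does, here is a minimal sketch. It is not AI Creator's implementation: the `Region` class, the `keep_region` function, the use of the bounding rectangle for area and side lengths, and the inclusive range bounds are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """A detected region described by its label and bounding rectangle (hypothetical)."""
    label: str
    width: float
    height: float

    @property
    def area(self):
        return self.width * self.height

    @property
    def long_side(self):
        return max(self.width, self.height)

    @property
    def short_side(self):
        return min(self.width, self.height)


def in_range(value, bounds):
    """True if bounds is None (filter disabled) or low <= value <= high."""
    if bounds is None:
        return True
    low, high = bounds
    return low <= value <= high


def keep_region(region, labels, area_range=None,
                long_side_range=None, short_side_range=None):
    """Keep a region only if its label is selected and every enabled range matches."""
    return (
        region.label in labels
        and in_range(region.area, area_range)
        and in_range(region.long_side, long_side_range)
        and in_range(region.short_side, short_side_range)
    )
```

For instance, `keep_region(Region("target", 40, 10), {"target"}, area_range=(100, 1000))` keeps the region because its area of 400 falls inside the range.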

After setting the parameters, click "Start Training".

TIP

For detailed explanations of specific parameters, hover over the help icon next to the parameter.

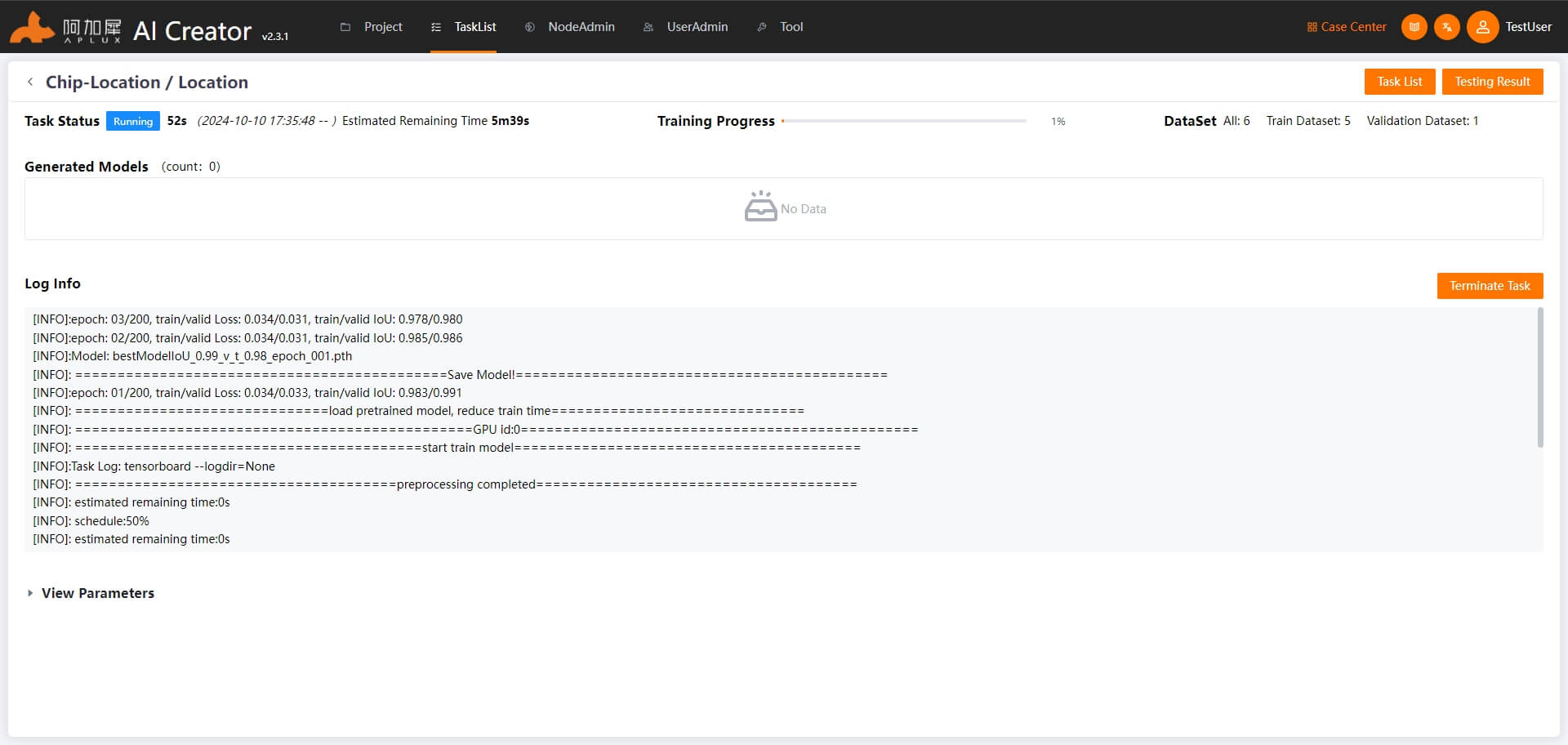

Training Process

Once training begins, the page automatically navigates to the training details screen. Here you can view real-time logs, such as the current epoch, and monitor metrics such as the training and validation loss and the training and validation IoU.

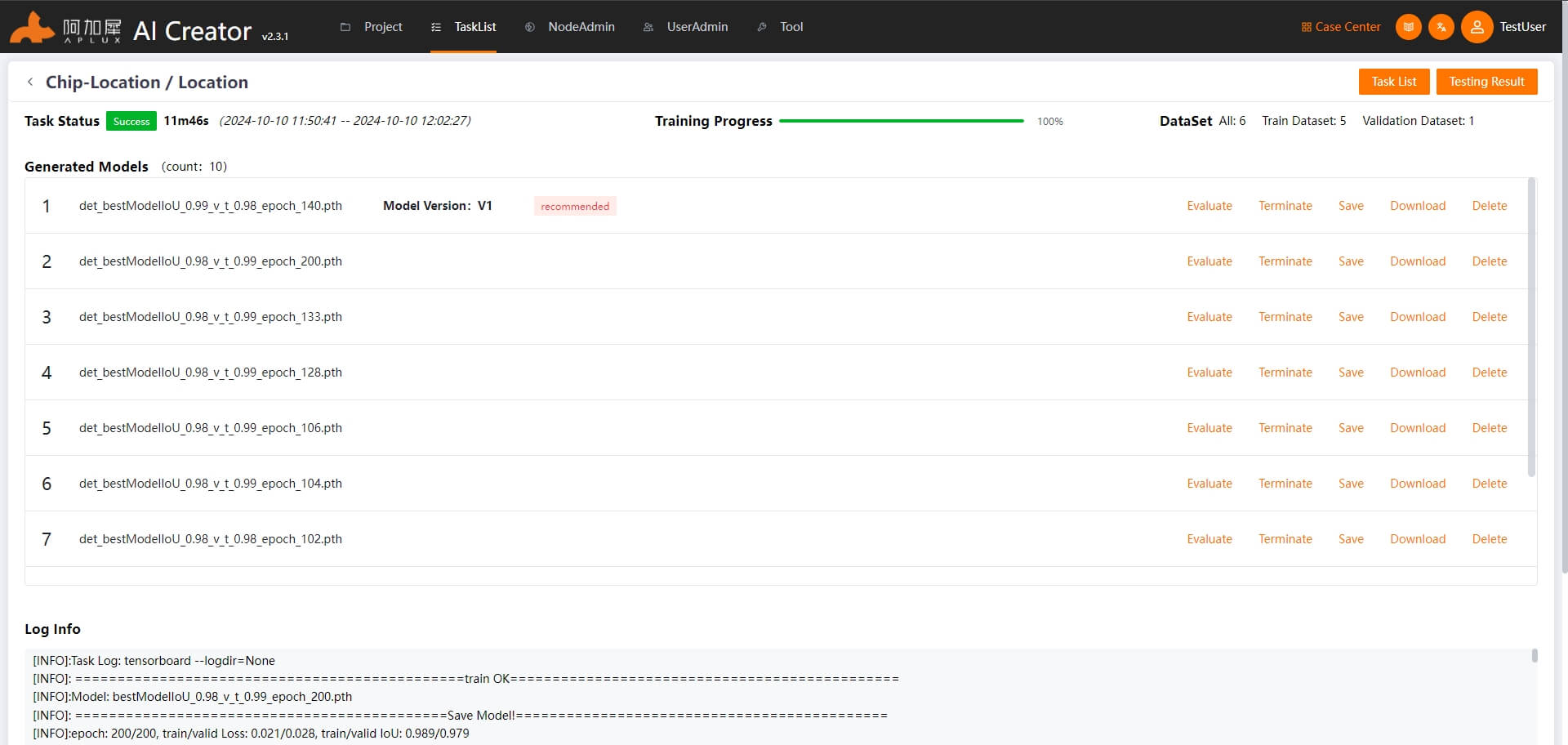
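For readers unfamiliar with IoU (intersection over union), the sketch below computes it for two axis-aligned rectangles. It only illustrates the metric: how AI Creator computes the IoU it reports (for example, per pixel or per box) is not stated here, and the function name and box format are assumptions.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap, clamped at zero when the boxes are disjoint.
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


label_box = (0, 0, 2, 2)       # labeled target
predicted_box = (1, 1, 3, 3)   # model prediction
print(box_iou(label_box, predicted_box))
```

An IoU of 1.0 means the prediction matches the label exactly, and 0.0 means the two do not overlap at all.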

Training Completion

AI Creator will automatically evaluate and save several trained models. After training is complete, the system will select the best-performing model and archive it as a version. It will also automatically conduct an evaluation based on this model.

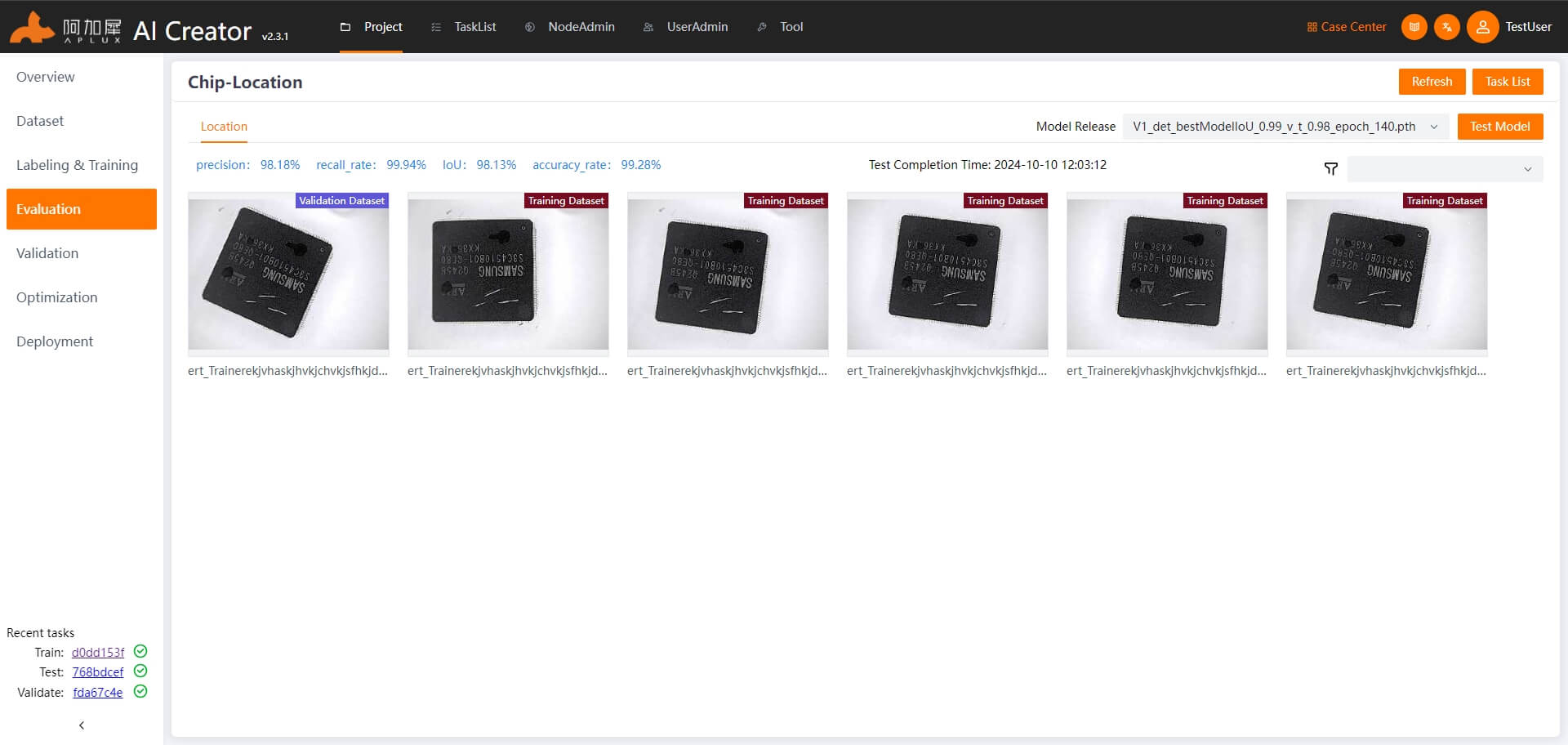

Model Evaluation

After model training is complete, the system will automatically select the best model for evaluation and calculate performance metrics.

AI Creator supports model evaluation on training images and displays the results. By default, the results are displayed as image overlays, where you can view labels, results, and ROIs.

If the model evaluation does not meet expectations, follow these iterative steps until the desired performance is achieved:

- Incremental training based on the existing model: Select "Incremental Training" and train again.

- Add new images, label them, then select "Incremental Training" and train again.

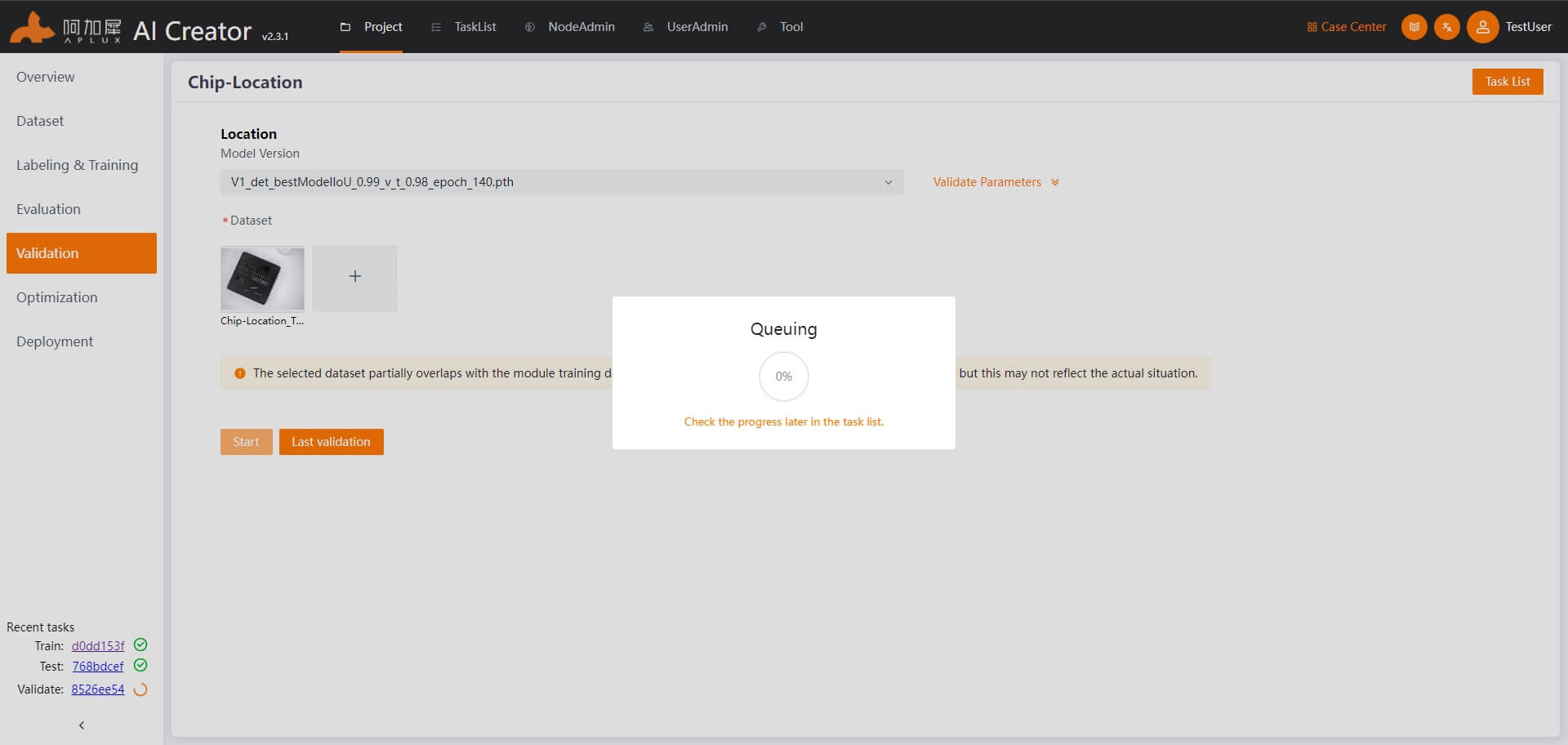

Model Validation

Once the model evaluation meets expectations, use the test set images to independently validate the model's performance.

Click "Project Validation" in the left sidebar.

On the model validation page, select the test images and click the "Start Validation" button.

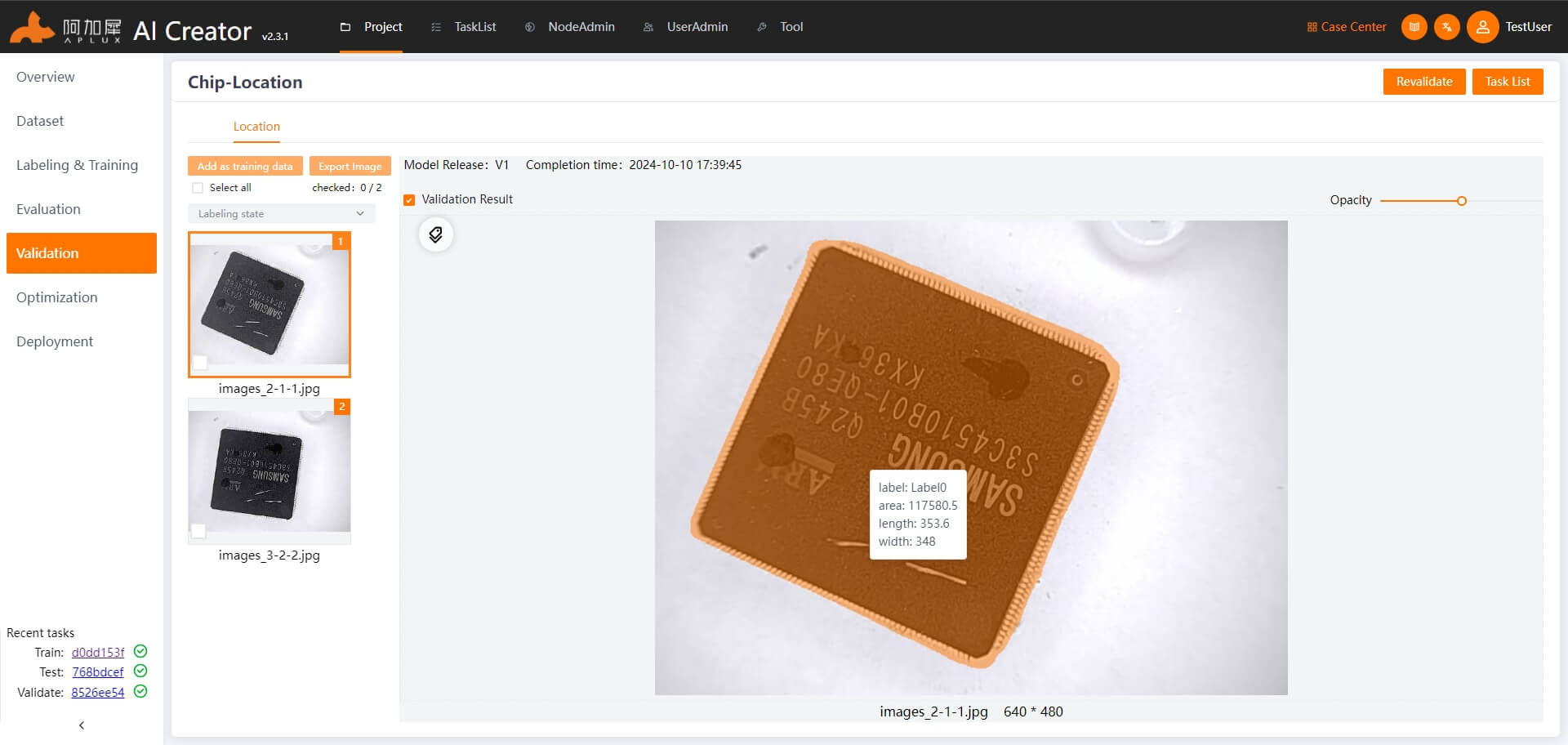

View the validation results. After validation, the system will automatically navigate to the results page.

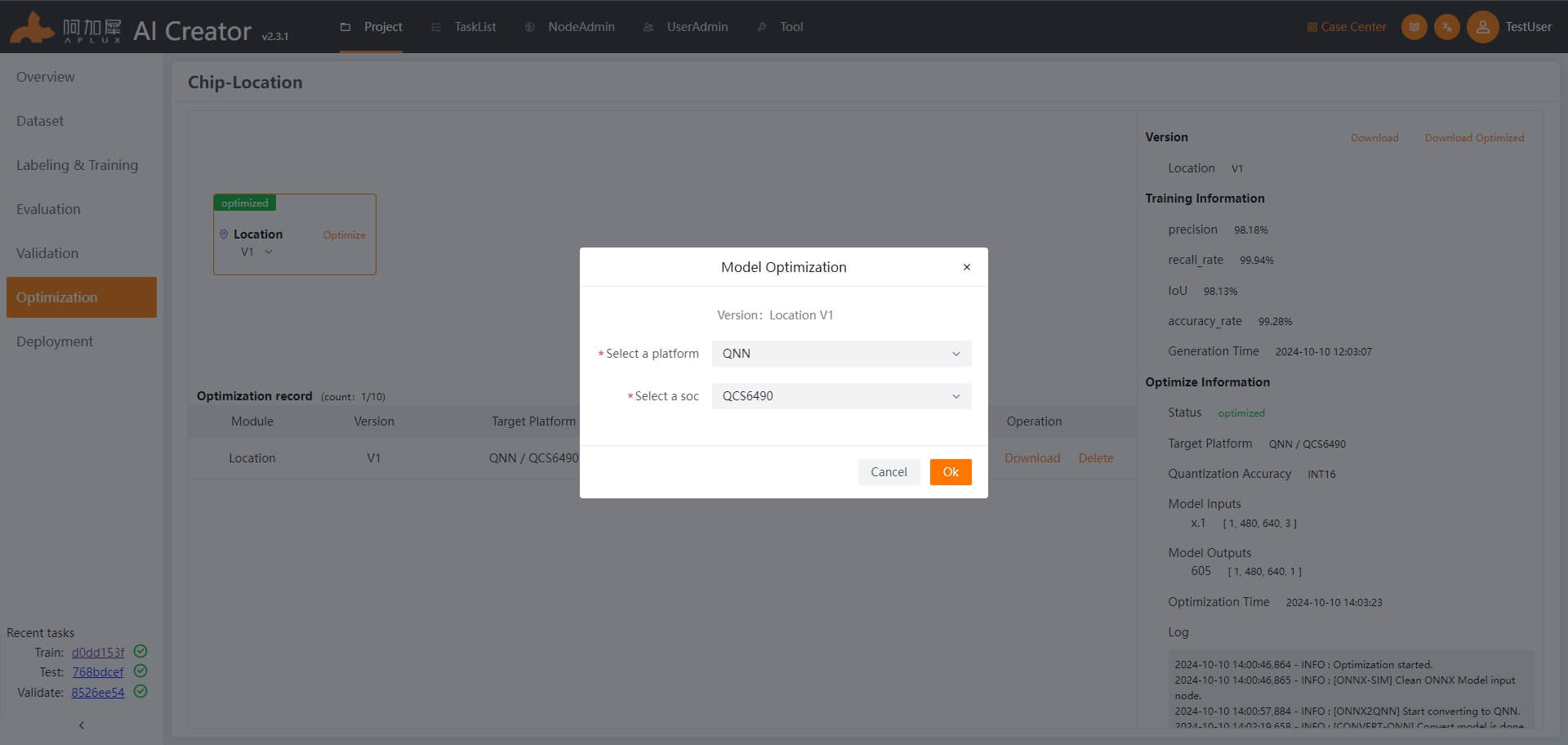

Model Optimization

- In the left sidebar, click "Model Optimization".

- If the model was trained on an x86 platform, it must be converted and optimized before it can run on ARM-based edge devices. AI Creator supports auto optimization and advanced optimization, and the location algorithm supports both. For the platform, select QONN; for the chip, select QCS6490.

Deployment

Click "Deployment" in the left sidebar to enter the deployment page.

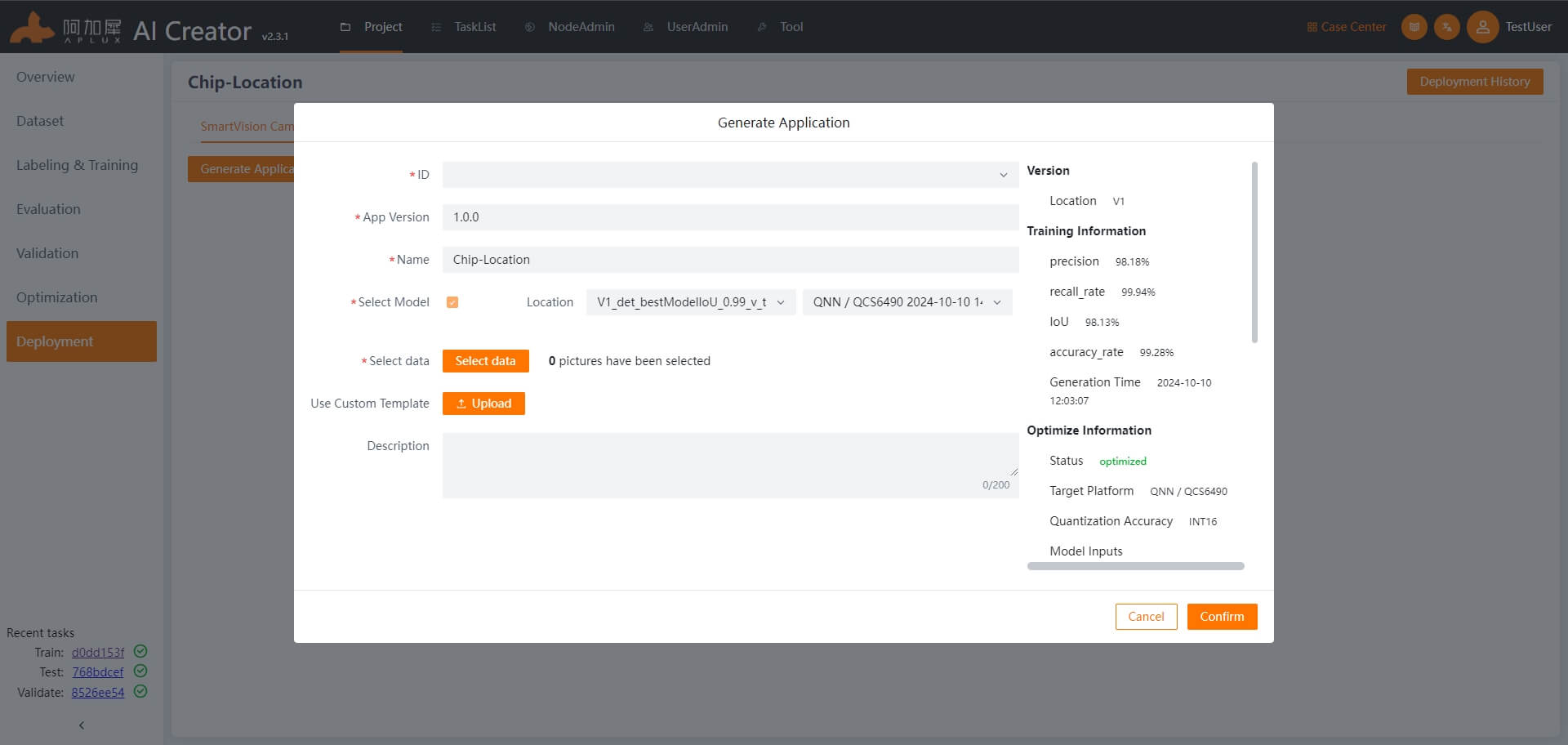

Generate Smart Camera Application

On the deployment page, click the "Generate Smart Camera Application" button. In the popup window, enter the application ID, version number, name, and description. Select the model version to deploy, and choose the data (static images used for inference by the smart camera). Finally, click "OK" to generate the smart camera application.

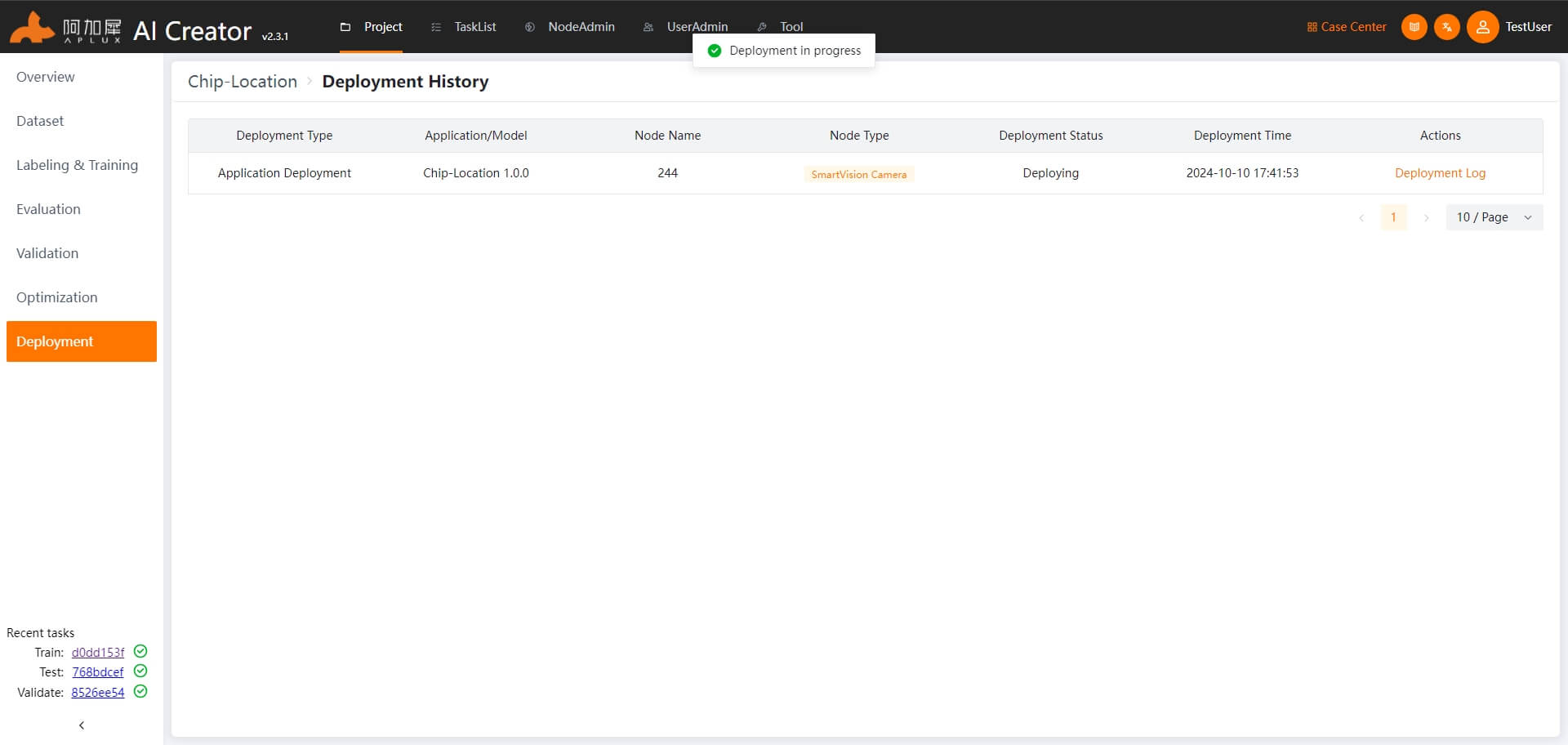

Deploy Application

On the deployment page, click the "Deployment" tab to enter the application deployment page.

Node Integration

Before deploying a model or application, you need to integrate the device nodes.

- Click the "Node Management" tab at the top of the page to enter the node management page.

- Click the "Integrate Node" button to open the integration popup.

- In the popup, choose IP integration, select "Smart Camera" as the node type, enter the node name and IP address, then click "OK".

Once integrated, the "Node Type & Connection Status" column will show "Smart Camera: Online".
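If a node does not come online, it can help to check the IP address outside AI Creator first. The sketch below is a generic check using only the Python standard library; it is not an AI Creator feature. The function names are hypothetical, and the port to probe depends on the camera and must be supplied by you.

```python
import ipaddress
import socket


def is_valid_ip(text):
    """True if the text is a well-formed IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(text.strip())
        return True
    except ValueError:
        return False


def can_connect(ip, port, timeout=2.0):
    """True if a TCP connection to ip:port succeeds within the timeout.

    The port is device-specific (assumption): use one that the smart
    camera is known to listen on.
    """
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


print(is_valid_ip("192.168.1.10"))   # well-formed address
print(is_valid_ip("192.168.1.300"))  # octet out of range
```

A failed `can_connect` call only shows that the chosen port is unreachable from your machine; it does not by itself mean the camera is offline.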

Return to Project Deployment Page

Click "Project Center", then in the operation list of the current project, click "Enter Project" -> "Deployment" to enter the main deployment page.

Deploy Smart Camera Application

On the deployment page, click the "Deployment" tab. In the operation list of the created smart camera node, click "Deploy Application" -> "Deploy".

Application Validation

Once AI Creator indicates that the application deployment is complete, run the deployed application on the smart camera node and view the results.

TIP

This section is not part of AI Creator functionality and is provided as a next-step guide.